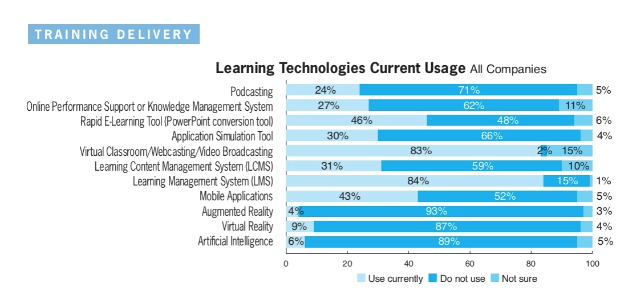

Training Magazine recently published its 2020 Training Industry Report.

This graph stood out …

According to the survey, only 6% of organizations are using artificial intelligence to deliver training. Another 89% said they are not using AI, and the remaining 5% aren’t sure.

Hmmmmm …

Almost everyone has an LMS. One quarter of companies have figured out audio-only training. One in ten has implemented VR despite the logistical challenges and content development costs. But only 6% are using AI? That doesn’t sound right.

- When does L&D consider themselves to be “officially” using AI?

- Which personas within workplace learning must be applying AI-enabled technology as part of their roles to constitute official use?

- How will this perspective influence AI adoption, governance and value within L&D?

We use AI every day, whether we think about it or not. If you have a smartphone, you use AI. If you shop online, you use AI. If you stream digital entertainment, you use AI. However, we are typically passive users. We provide the data. The AI does the work behind the scenes. We reap the benefits (sometimes). You don’t “work with AI” in Google Maps in the way you “work with video” in Adobe Premiere, but it’s still a fundamental part of the user experience.

L&D pros also use AI every day, whether we think about it or not. Google’s Smart Compose helps us write more accurately and efficiently. PowerPoint Designer helps us build better slides. Zoom automatically transcribes our online meetings so we can more easily find the information we need later. Shouldn’t these common activities count as “using AI” in our work?

Then there’s the most commonly used learning technology: the LMS/LXP. I don’t have official research on this, but your LMS/LXP probably applies at least basic AI to power the user experience. Does it auto-tag content? Does it transcribe video? Does it make content recommendations? It may not be super powerful or impactful, but most established learning platforms now use AI.

The AI applications I’ve mentioned so far are software-enabled, meaning the AI is built into the tool and doesn’t require you to supply much data for it to work. The same is true for AI-enabled chat, translation and authoring tools, which use source input and natural language understanding technology to generate conversational responses and training content. However, these are more visible forms of L&D AI: you actively use the tool to produce a specific output.

Finally, there’s software-executed AI. These applications require significant information architecture to generate the desired output. Examples include learning impact analysis, skill matching, personalization and coaching. You can’t apply these concepts without a dedicated AI project and everything that comes with it (governance, privacy, risk, expertise, etc.).

So where’s the line? When should an L&D team consider themselves active AI users? And as AI becomes an increasingly foundational and ubiquitous part of our everyday technology, when should you start having serious AI conversations, even if you haven’t made a conscious decision to become an AI-enabled learning function?

All things considered, that 6% should be more like 60%.